Ensuring Data Integrity in Microsoft Fabric: Validating Excel Headers in Data Pipeline Before Loading Data into the Destination

Inkey Solutions, March 21, 202571 Views

In data engineering, maintaining data integrity is crucial. One common challenge is ensuring that an incoming file contains all the required column headers before loading it into the destination table.

In this blog, we will implement a solution in Azure Fabric where we:

- Load an Excel file into Lakehouse.

- Extract and validate the column headers.

- Load data into the destination table only if all required headers exist.

- Send a notification if any required header is missing.

Scenario & Goal

We have an Excel file stored in Azure Blob Storage, and we need to verify if it contains the required headers before loading it into the destination table.

Conditions for Loading Data:

The file must contain these mandatory headers:

- Productid

- Productname

- Materialtype

- Size

- Costperunit

If any required header is missing, the pipeline should send a notification and skip loading the data.

If all required headers exist, the data should be loaded into the destination table.

Implementation in Microsoft Fabric

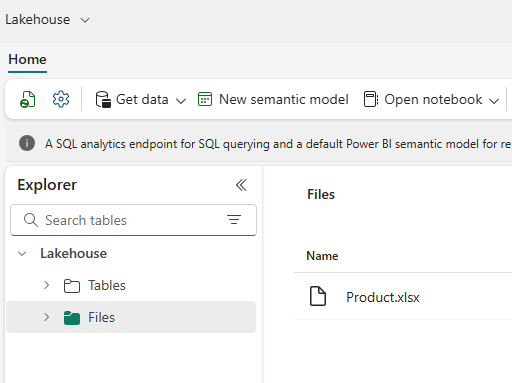

Step 1: Load the Product File into Lakehouse

- Open Microsoft Fabric and navigate to Lakehouse.

- Upload the Product.xlsx file into the Files section.

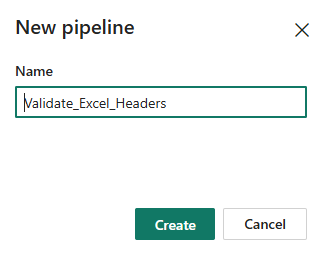

Step 2: Create a Data Pipeline

- Go to Data Factory in Azure Fabric.

- Create a new pipeline and name it Validate_Excel_Headers.

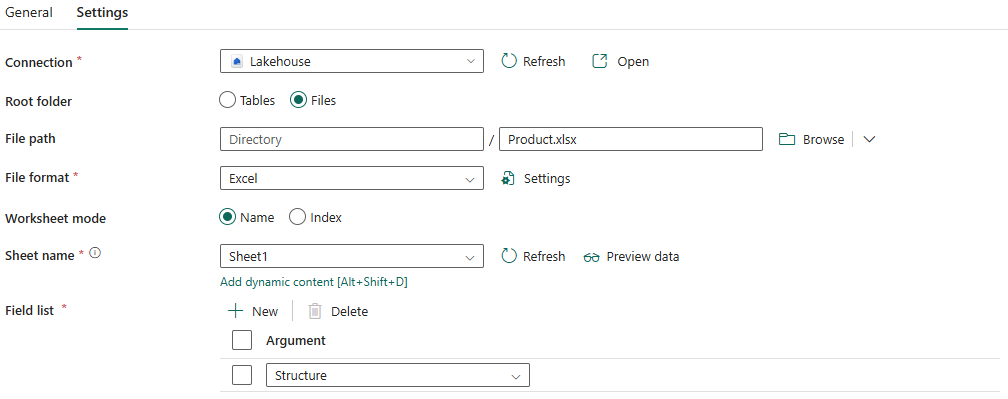

Step 3: Extract Column Headers Using ‘Get Metadata’ Activity

- Add a Get Metadata activity to fetch column headers.

- Set the dataset type as Excel and configure the linked service to point to the Lakehouse file.

- In the Field List, select “structure” to retrieve the file schema.

Step 4: Store Column Headers in a Variable

In this step, we will loop through the extracted column headers from the metadata activity and store them in a variable for validation.

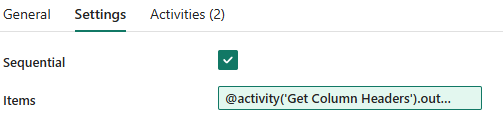

Add a ForEach Activity

- Drag and drop the ForEach activity into the pipeline.

- Connect it to the Get Metadata activity (to ensure it runs after extracting the column headers).

- Set Items as:

@activity(‘Get Column Headers’).output.structure

- Enable Sequential Execution if required.

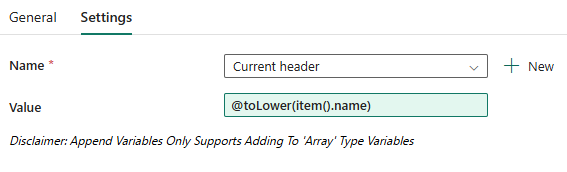

Inside ForEach: Add Append Variable Activity

- Inside the ForEach activity, add an Append Variable activity.

- Configure it as follows:

- Variable Name: Current header

- Value: @toLower(item().name)

- This ensures all headers are stored in lowercase for consistent validation.

Inside ForEach: Add Set Variable Activity

- Add a Set Variable activity after Append Variable.

- Configure it as follows:

- Variable Name: All Headers

- Value: @variables(‘Current header’)

- This consolidates all extracted headers into the All Headers variable.

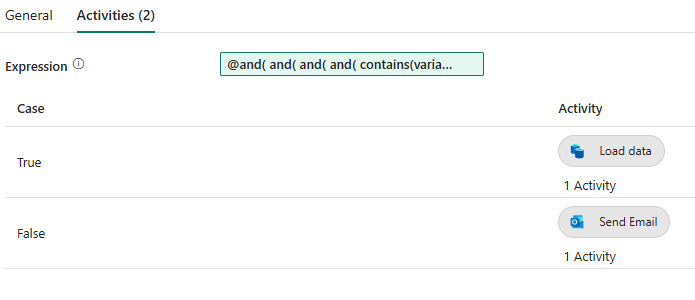

Step 5: Validate Headers Using an If Condition

- Add an If Condition activity.

- Use the following expression to check if all required headers exist:

@and(

and(

and(

and(

contains(variables(‘All Headers’), ‘productid’),

contains(variables(‘All Headers’), ‘productname’)

),

contains(variables(‘All Headers’), ‘materialtype’)

),

contains(variables(‘All Headers’), ‘size’)

),

contains(variables(‘All Headers’), ‘costperunit’)

)

Step 6: Define Actions for If Condition

- If the condition is True → Proceed with loading data into the destination table.

- If the condition is False → Send a notification.

Branch 1: If Headers Are Correct (Load Data into Destination)

- Add a Copy Data activity.

- Set the source as the validated Excel file in Lakehouse.

- Set the destination as the final table (e.g., a SQL table or another Lakehouse table).

Branch 2: If Headers Are Missing (Notify)

- Add an Office 365 Outlook activity to send an email notification, informing users that the uploaded file is missing required headers and needs to be corrected.

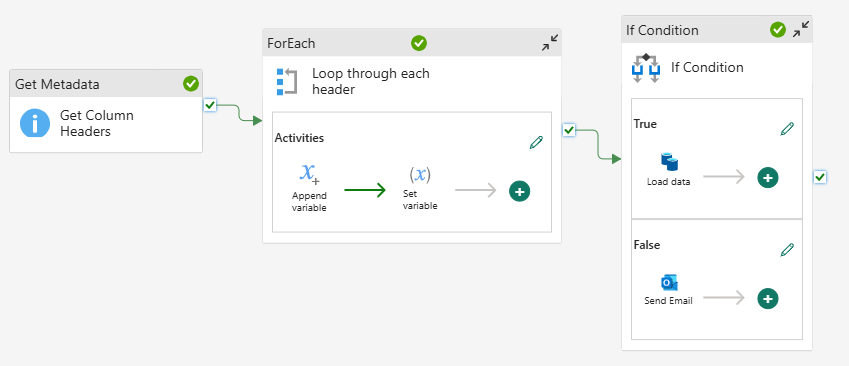

Final Pipeline Overview

Here is the complete pipeline view with all steps configured: