Copy Data Dynamically From Multiple Sources To Multiple Sinks In ADF

WebSiteAdmin, December 5, 20232759 Views

In this blog we are going to learn how to implement Dynamic Mapping to copy data from multiple sources to sinks in ADF.

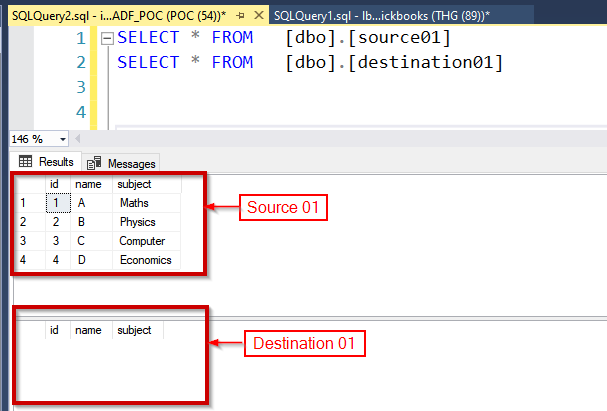

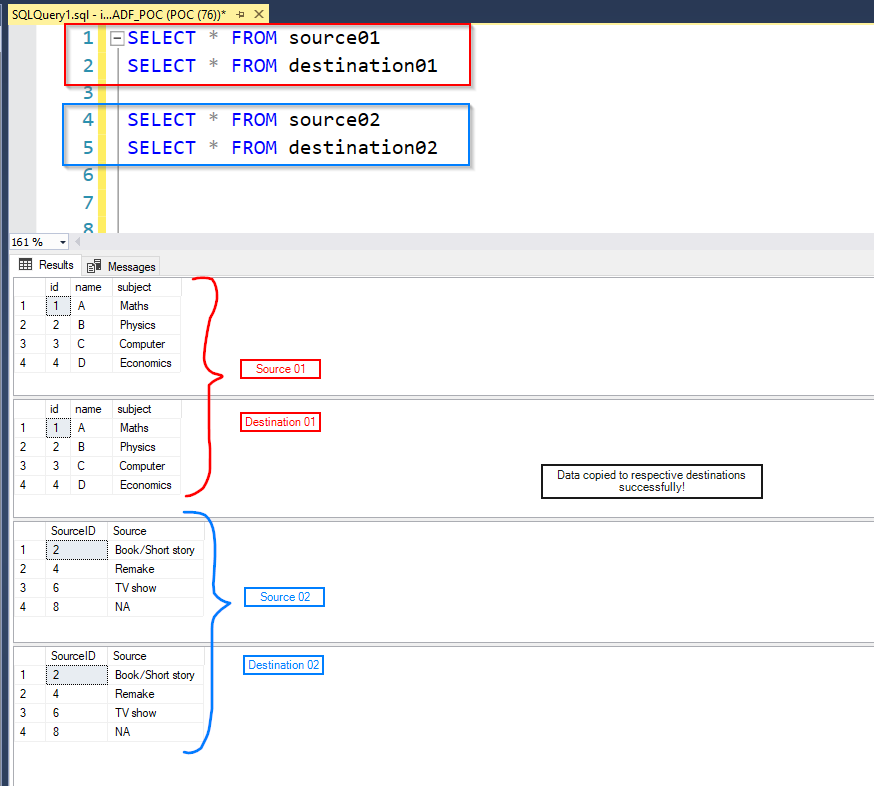

The below attached images show that there are 2 pair of source-destination tables. Now the source table is holding some data while the destination tables is blank, but its schema is similar to its respective source table. [Refer below images]

Now our requirement is to copy data from all the source tables to their respective destination tables in ADF. To fulfil this requirement, we usually create a copy data activity and pass the source & destination datasets – which basically copies the data from source to destination. But now let’s say there are 10 source & 10 destination datasets, one needs to create the Copy Data Activity 10 times & pass the respective source & destination. This approach is inherently redundant and repetitive in nature. We could resolve this by making it dynamic.

Making Copy Data Dynamic

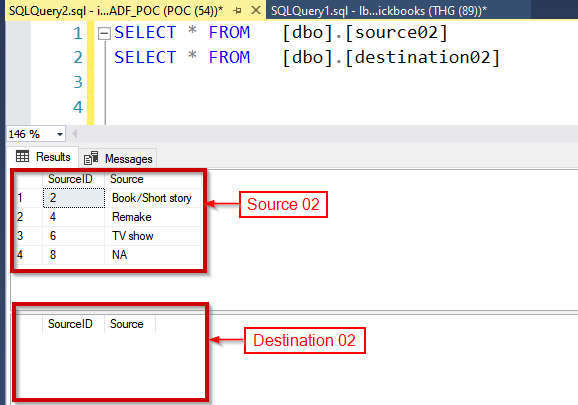

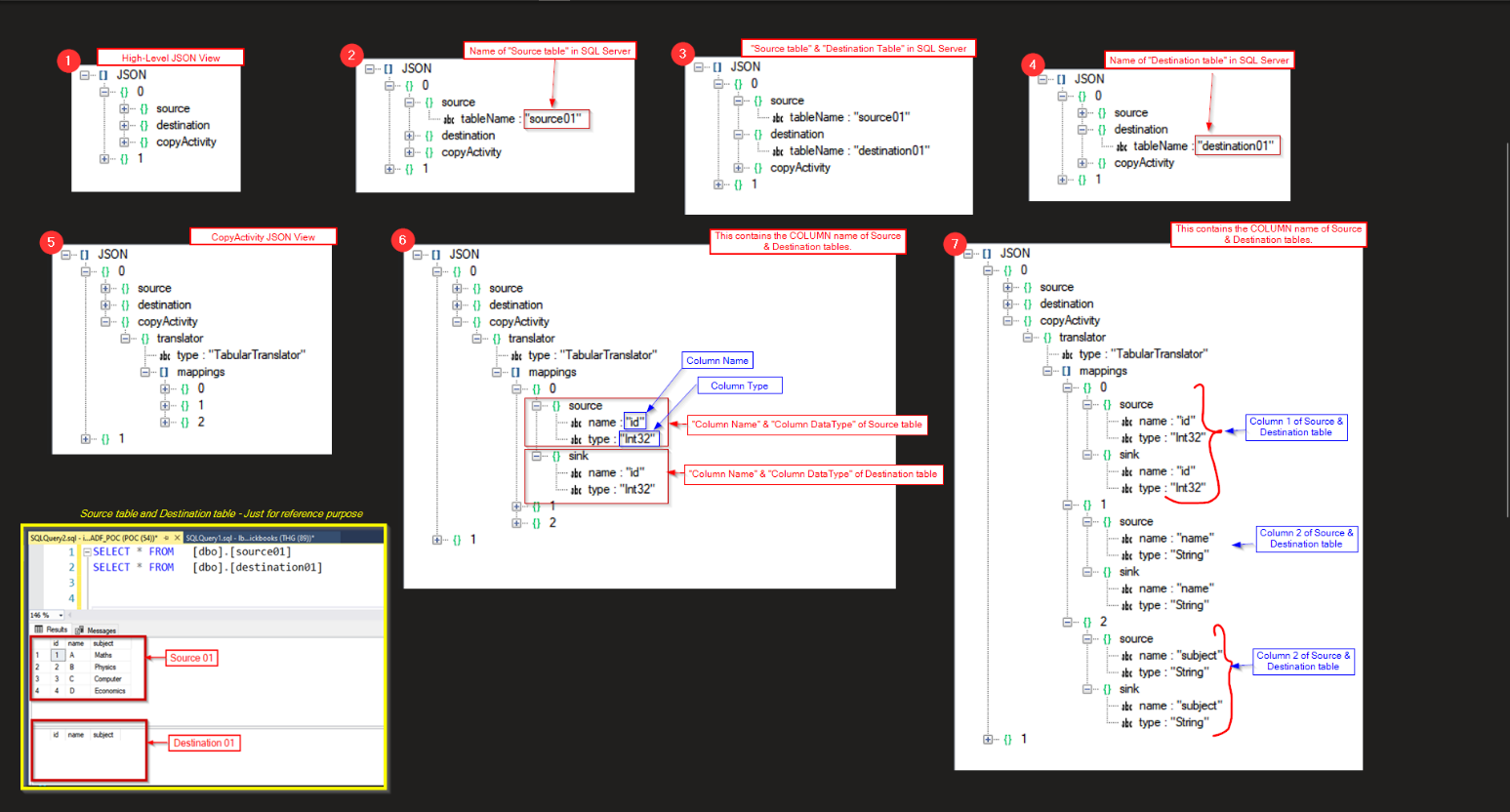

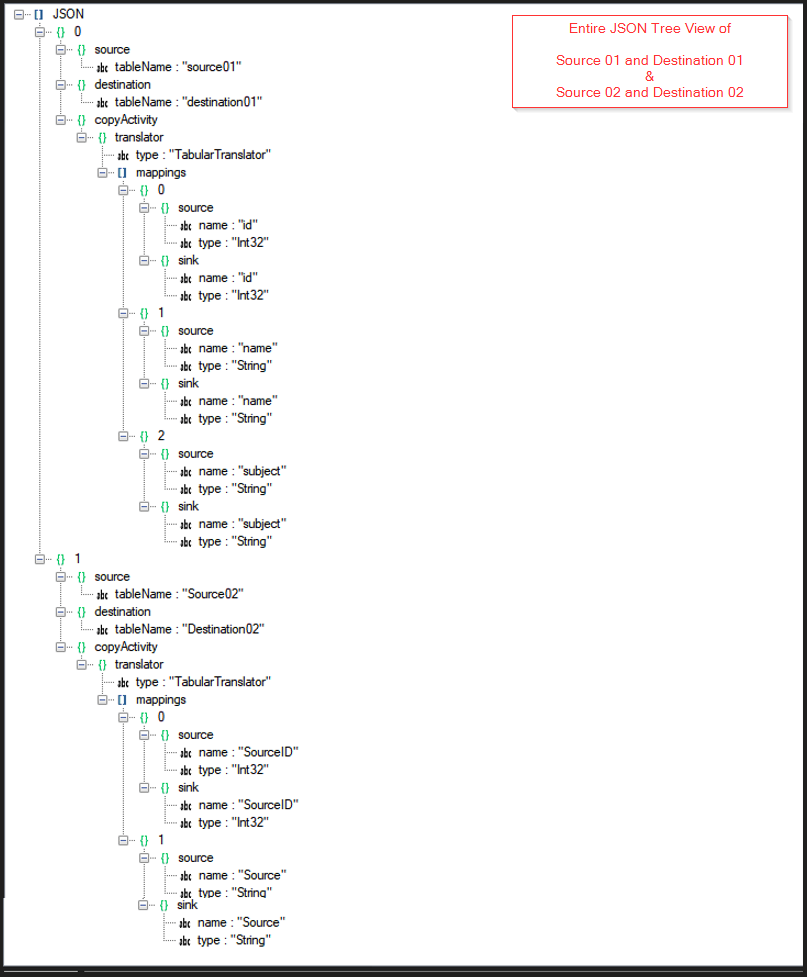

To make copy data dynamic we need to create a JSON with source and destination tables, this is the required format & based on this JSON we shall copy data from multiple source to their respective destination using a single Copy Data Activity

[

{

"copyActivity": {

"translator": {

"mappings": [

{

"sink": {

"name": "id",

"type": "Int32"

},

"source": {

"name": "id",

"type": "Int32"

}

},

{

"sink": {

"name": "name",

"type": "String"

},

"source": {

"name": "name",

"type": "String"

}

},

{

"sink": {

"name": "subject",

"type": "String"

},

"source": {

"name": "subject",

"type": "String"

}

}

],

"type": "TabularTranslator"

}

},

"destination": {

"tableName": "destination01"

},

"source": {

"tableName": "source01"

}

},

{

"copyActivity": {

"translator": {

"mappings": [

{

"sink": {

"name": "SourceID",

"type": "Int32"

},

"source": {

"name": "SourceID",

"type": "Int32"

}

},

{

"sink": {

"name": "Source",

"type": "String"

},

"source": {

"name": "Source",

"type": "String"

}

}

],

"type": "TabularTranslator"

}

},

"destination": {

"tableName": "Destination02"

},

"source": {

"tableName": "Source02"

}

}

]

Once the JSON is created, we need to follow the below mentioned steps to dynamically copy data from multiple sources to their appropriate destinations:

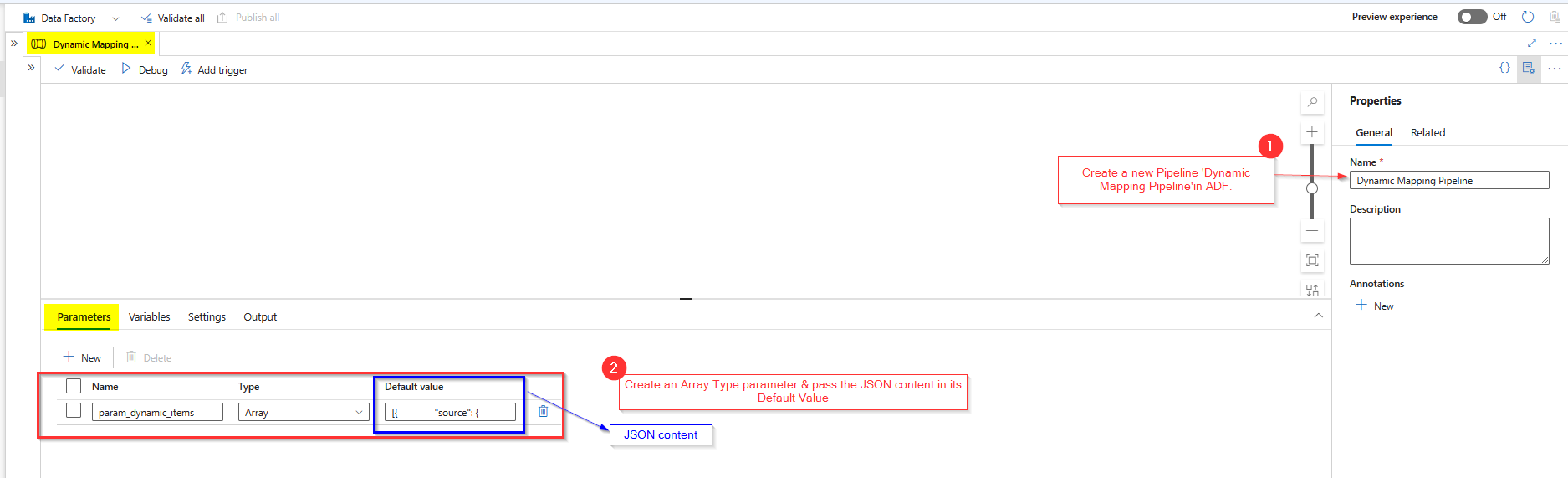

- Create a new pipeline & rename it to “Dynamic Mapping”.

- Inside the Pipeline Parameters section, create a new parameter [Array Type] “param_dynamic_items” and add the JSON content that we created above in “Default value”.

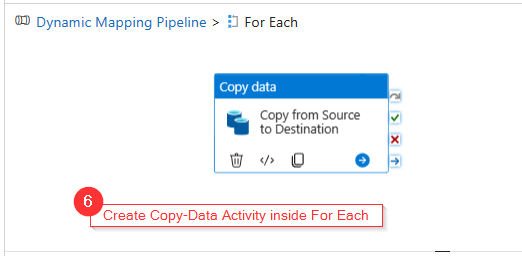

- Add a ‘For-Each’ activity in the pipeline.

- Select the ‘For-Each’ activity & configure the Items property in the Settings section to Pipeline Parameter “param_dynamic_items” that holds the JSON Content.

- Add a ‘Copy Data’ activity inside the ‘For Each’ activity.

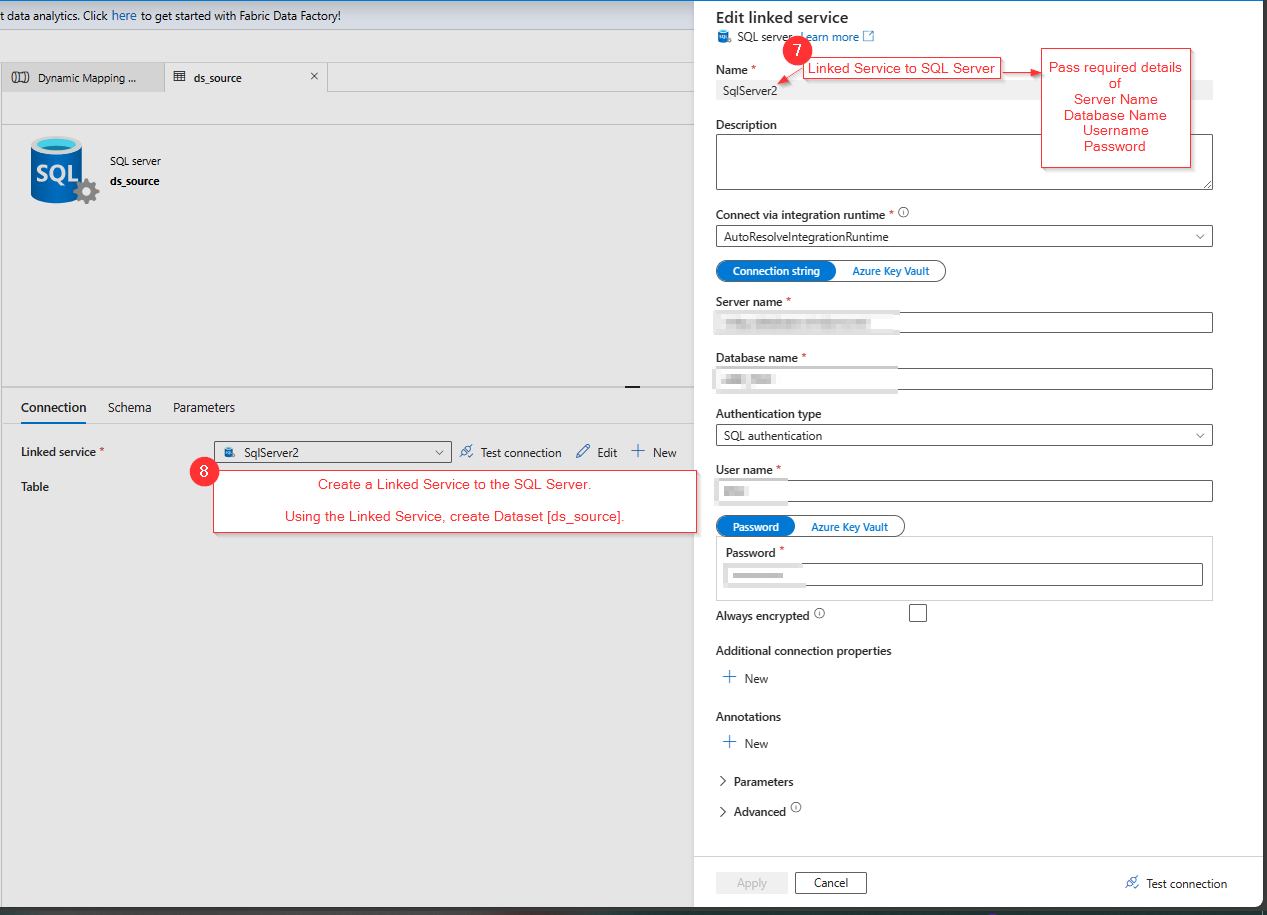

- Create a ‘Linked Service’ to SQL Server.

- Using this ‘Linked Service’, we will create 2 parameterized datasets: one for source & other for destination.

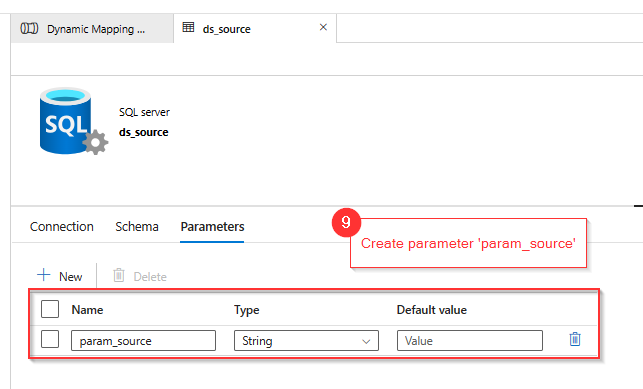

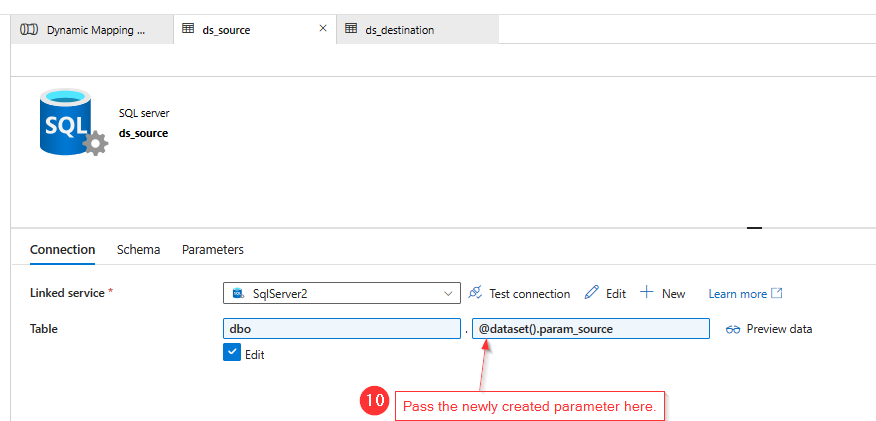

- Create a new dataset, “ds_source” and then create a dataset parameter “param_source” of type String.

- Inside the Connection section of dataset “ds_source”, we will pass the dataset parameter “param_source” to pass the source table values dynamically.

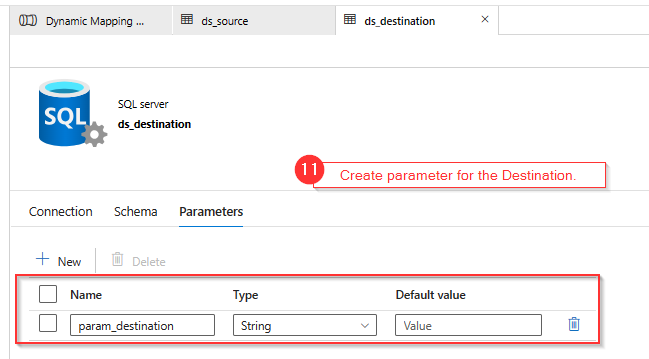

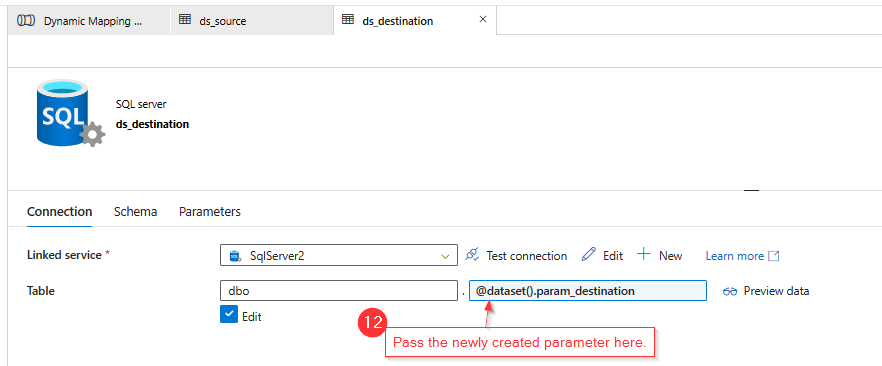

- Create a new dataset, “ds_destination” and then create a dataset parameter “param_destination” of type string.

- Inside the Connection section of dataset “ds_destination”, we will pass the dataset parameter “param_destination” to pass the destination table values dynamically.

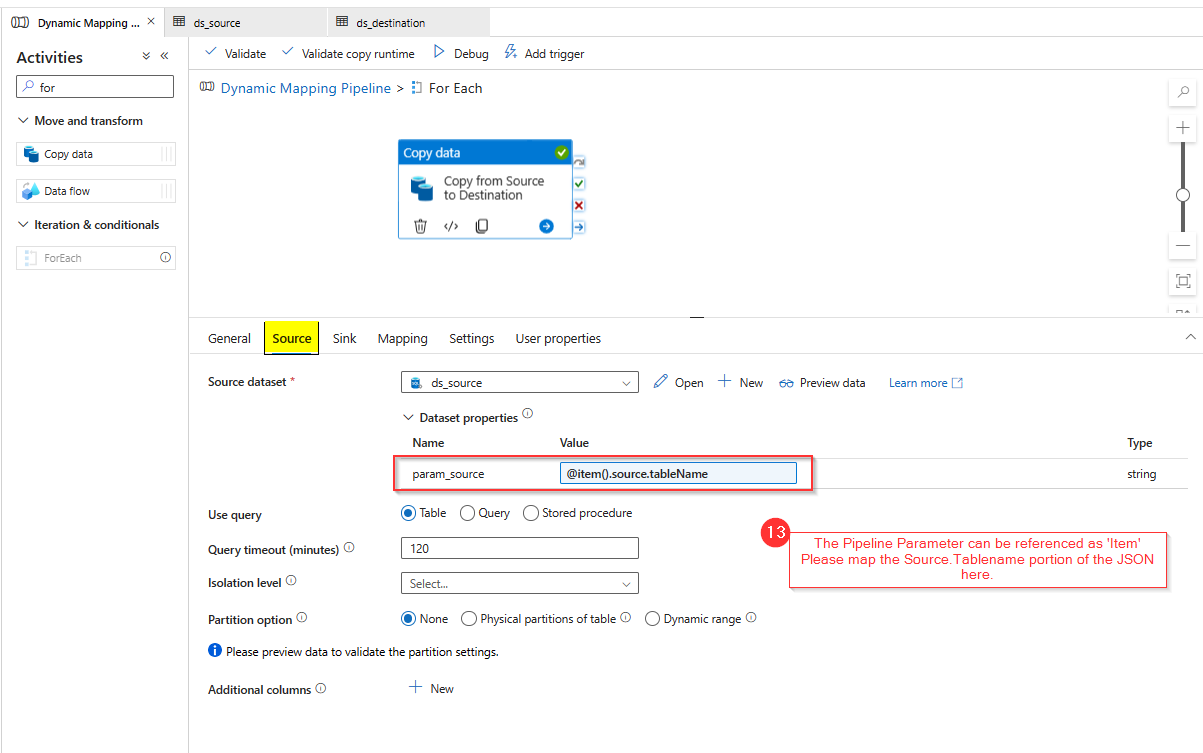

- Now, go back to ‘Copy Data Activity’ & inside the source section, select the source dataset to ‘ds_source’ & pass the dataset parameter to “@item().source.tableName”

[This will be pointing to the source tables mentioned in the JSON content]

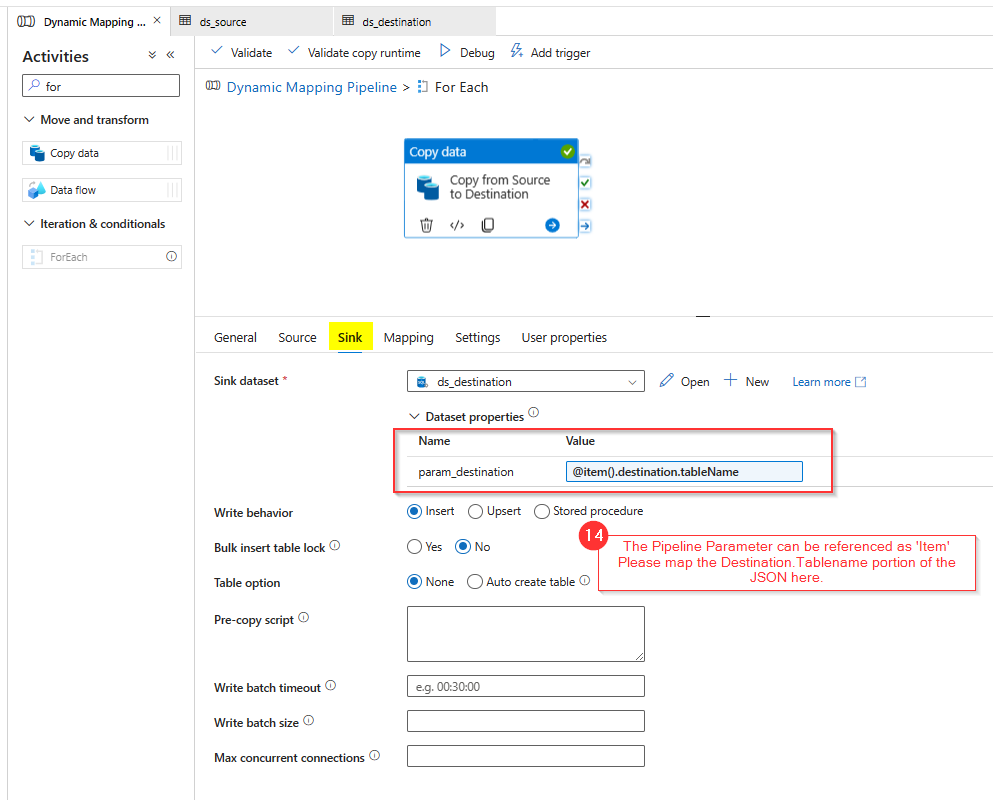

And inside the Sink section, select the source dataset to ‘ds_destination’ & pass the dataset parameter to “@item().destination.tableName”.

[This will be pointing to the destination tables mentioned in the JSON content]

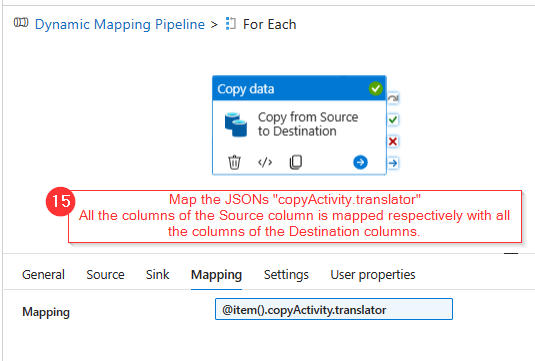

- In the mapping section of the ‘Copy Data’ activity, pass “item().copyActivity.translator”

[This will be pointing to the copy tables mentioned in the JSON content]

- Now, everything is successfully configured at the pipeline Level.

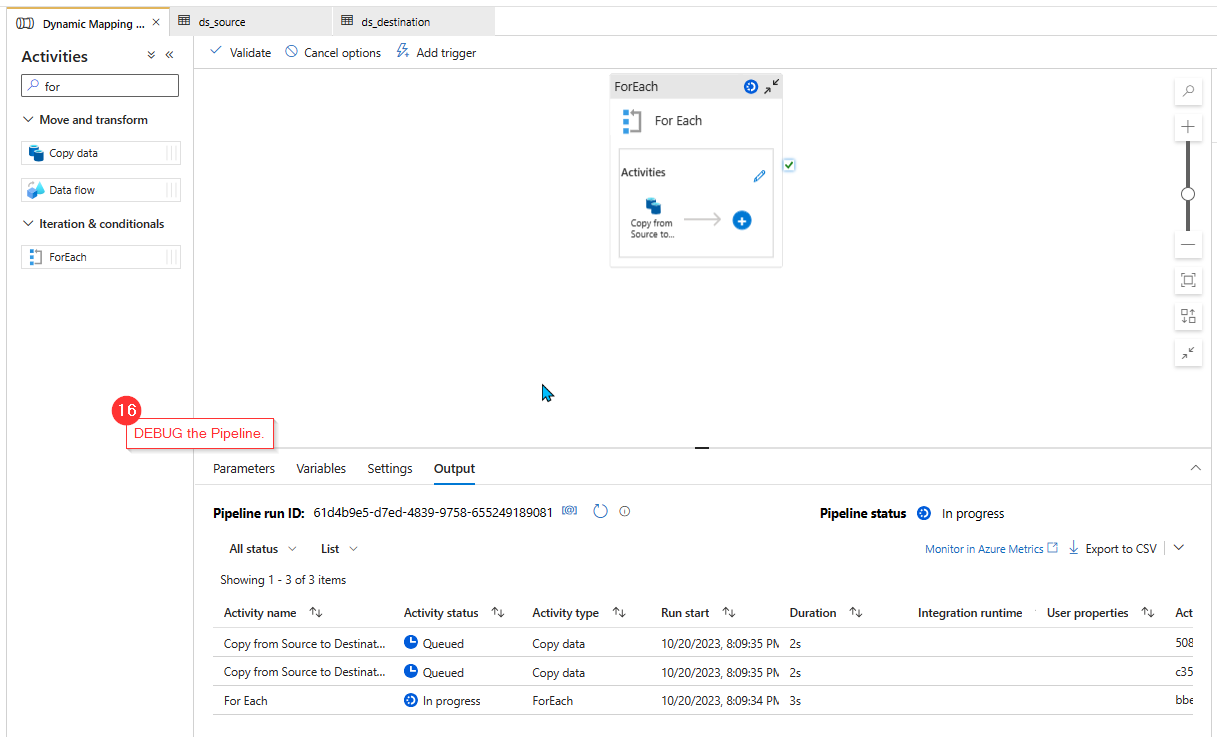

- Debug the pipeline & note the status of the pipeline run.

- Flow ran successfully!

- Check whether data from all the sources are copied to the respective destination or not.

In this way we can copy data from multiple sources to multiple sinks in ADF dynamically using a single ‘Copy Data Activity’.

ATM Inspection PowerApp to ease ATM inspection and report generation process.

https://www.inkeysolutions.com/microsoft-power-platform/power-app/atm-inspection

Insert data into Many-to-Many relationship in Dynamics CRM very easily & quickly, using the Drag and drop listbox.

http://www.inkeysolutions.com/what-we-do/dynamicscrmaddons/drag-and-drop-listbox

Comply your Lead, Contact, and User entities of D365 CRM with GDPR compliance using the GDPR add-on.

https://www.inkeysolutions.com/microsoft-dynamics-365/dynamicscrmaddons/gdpr

Create a personal / system view in Dynamics CRM with all the fields on the form/s which you select for a particular entity using the View Creator.

http://www.inkeysolutions.com/what-we-do/dynamicscrmaddons/view-creator